Thanks to everyone who participated in the CSGF 2010 Survey. With too many things going on this year, I didn’t conduct a survey at the 2011 Conference. But since people were asking about the survey, I thought I could at least make last year’s summary a bit more accessible. What follows comes from the PDF report I submitted to Krell, plus a few example figures generated from the data.

This year’s survey was longer and broader, receiving over 90 responses from fellows and alumni. Thanks to everyone who wrote in useful comments last year; many of them appear as multiple-choice options this year. This survey started out of my own ignorance about many of the methods and languages discussed at my first CSGF conference. Without what I’ve picked up from other fellows, my answers to most of the survey questions would have been “never heard of it.”

This year’s survey had three goals:

Identify computational usage patterns, languages, and skills among the fellows, and visualize how they break down by year in the fellowship, academic discipline, and other factors.

Quantify our adherence to good practice in software design, development, and documentation.

Explore our interest in Internet tools in a research context (Science 2.0 tools and practices).

Languages

The results were full of surprises. I found no obvious trends in language use across either department or year. Usage patterns varied more between disciplines than between years, but every group showed strong computational talent: over 40% have used DOE supercomputers, and 70% of each year and each discipline have experience with parallel programming. Most use both a compiled and a scripting language, and 25% of fellows have explored GPU computing. Knowledge of LaTeX and basic HTML is all but ubiquitous, while database use and more advanced web development platforms are mostly unknown. Students learn primarily from colleagues.

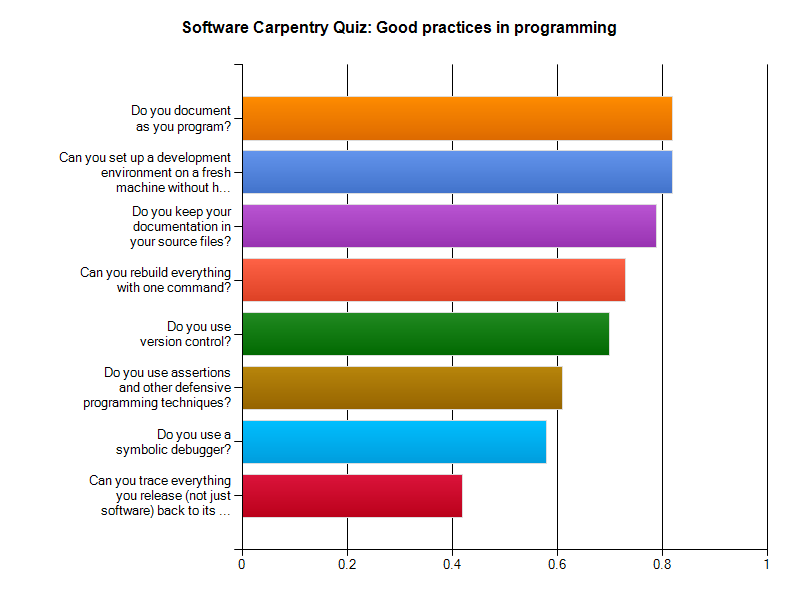

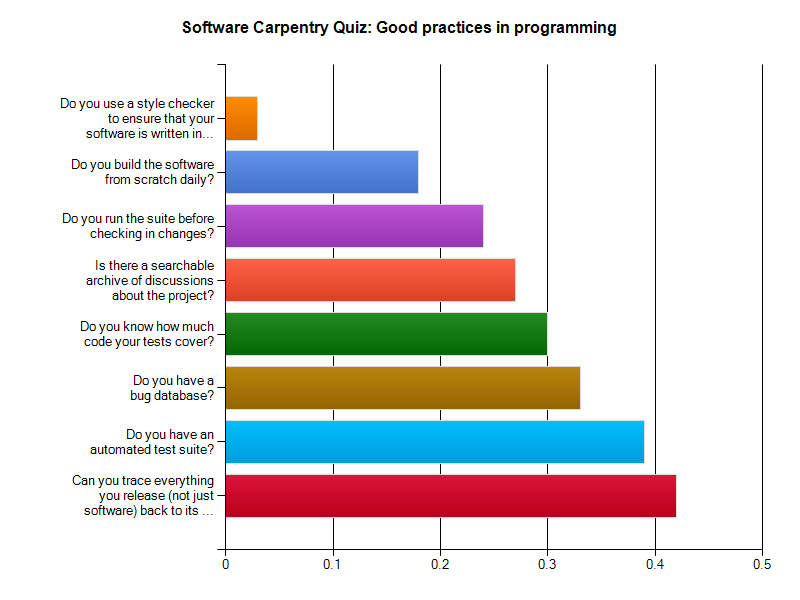

Software Practices

Scores on the good-software-practices quiz were reasonably high for scientists and varied little by year or discipline, averaging 8 out of 16 points. We’re particularly good at documenting code (80%) and version control (over 60%), but almost no one uses a coding-style checker or an automated test suite. Most fellows have experience writing software collaboratively, working across disciplines, and working across institutions. Some have experience developing software in large teams (more than 10 researchers).

Unfortunately, fellows were significantly less likely to publish in open access journals, and even less likely to post their publications on public archives, than to publish in the non-open-access literature. Fellows are somewhat more likely to release and distribute open source code. Fellows have explored little of the rapidly expanding set of Internet resources for science. Few have learned to productively leverage wikis or other software for electronic notebooks, blogs for public communication of science, RSS feeds for aggregating and sharing literature, forums for connecting to experts, or the social networking aspects that accompany these tools and connect researchers.

Science 2.0

Overall, we do quite well in our knowledge of languages and parallelism, reasonably well in our standards of good practice, and are rather unambitious in our exploration of Science 2.0 tools. The survey did not explore which numerical methods are commonly learned and applied, nor did it quantify the upper bound of computing scale (i.e., the number of processors used in an application) reached by the fellows or the causes of those bounds (lack of need, resources, training, etc.). Still, we’re deep into open source.

Get the data here.